Many years ago, I fell in love with the idea of computer-generated art. While 3D rendering is fun, geometric art is what I love the most. One of my first programming projects was a computer-generated Spirograph, which was a lot of fun to create.

It’s been a few years since I made anything like this, but in the past year I taught myself Go (which is a great language, Rust BTFO). Go is excellent for parallel processing, since it has concurrency primitives built in, and its standard library ships with a lot of web technology, including a fully functioning web server. Go has gained a lot of attention from folks who are writing distributed and scalable applications. Successful infrastructure projects such as Docker, Kubernetes, Prometheus, and Terraform are all written in Go, and if it’s good enough for these guys, it’s probably good enough for anything I’ll be making in the next few years.

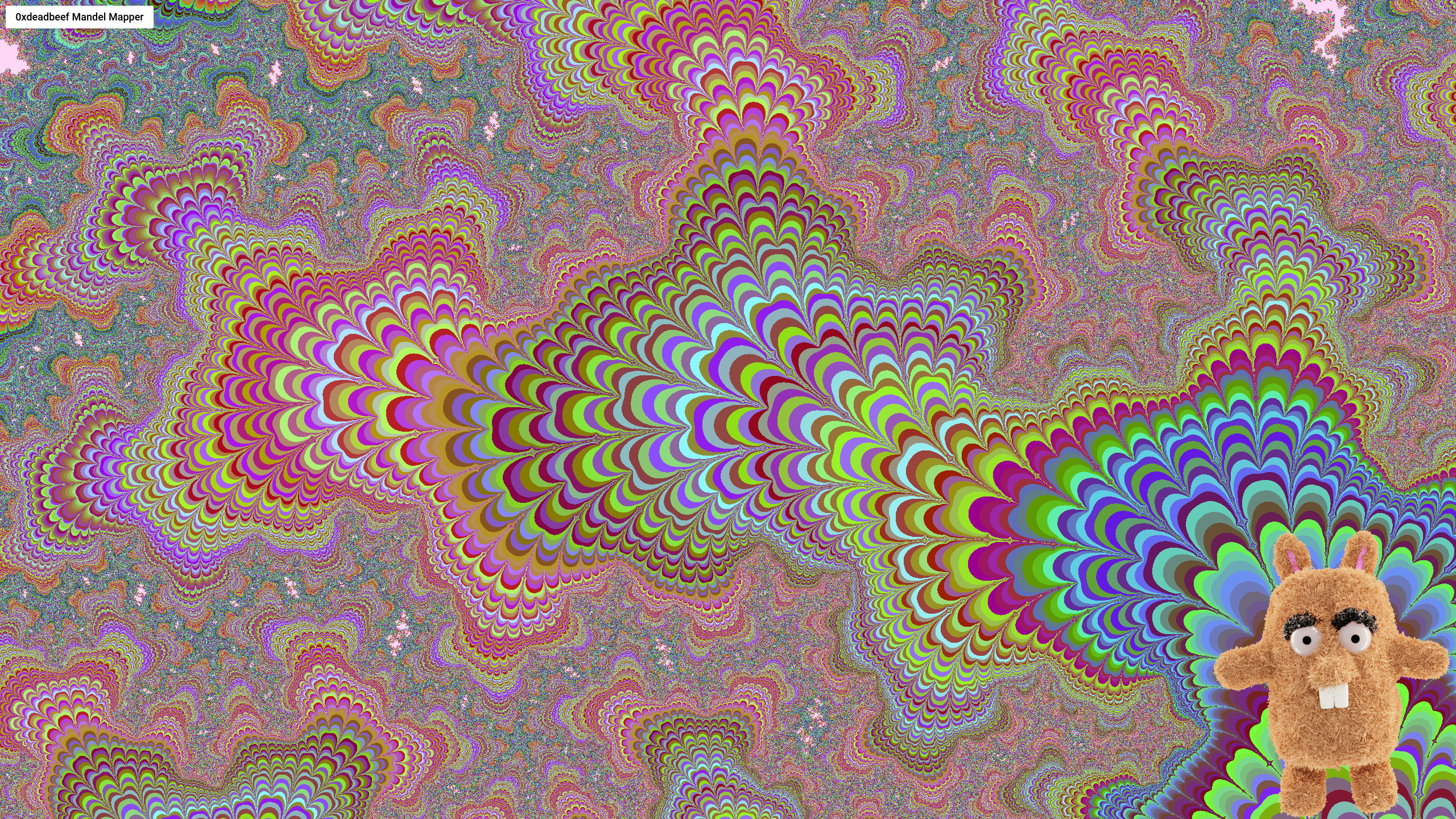

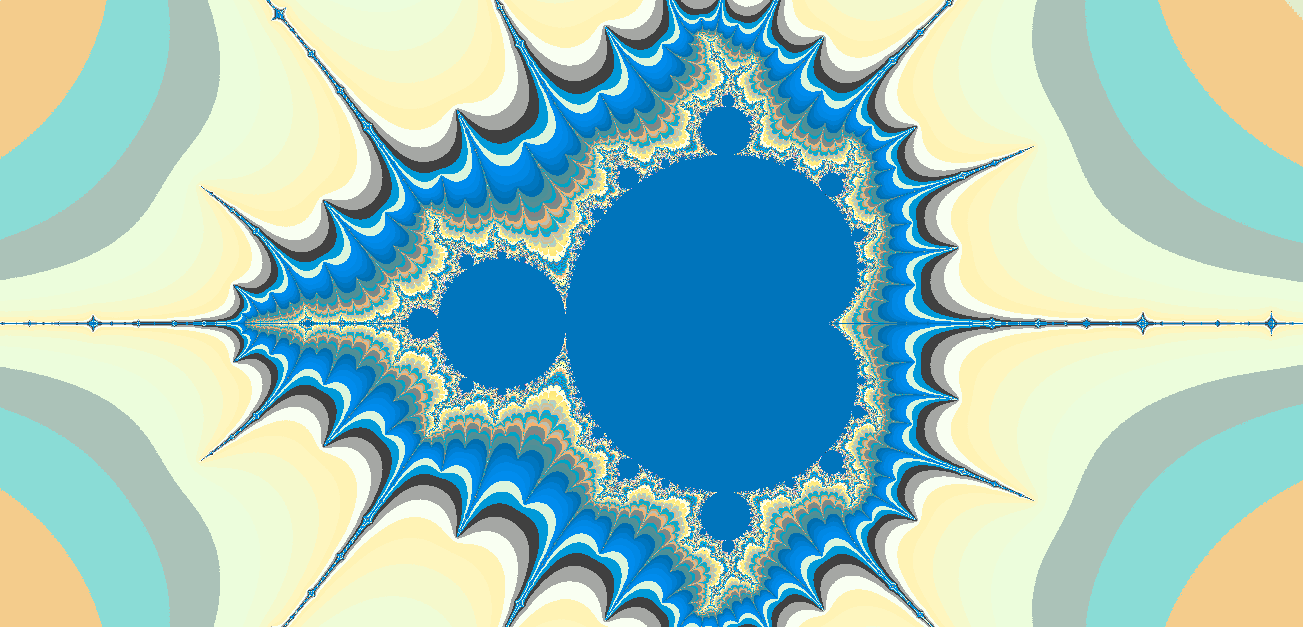

I wanted to make a scalable application that both demonstrated my knowledge of distributed systems and produced some appealing output. Going back to my love for computer art, I decided to make a distributed fractal renderer. I’d recently created a Mandelbrot set renderer in Go and had some pretty cool results, but all it would do is write an image to a file.

In its current state, it wasn’t concurrent, wasn’t scalable, and wasn’t web-accessible. I wasn’t sure how I was going to solve any of those problems yet, but I started looking at projects that had to solve similar problems (showing an ungodly massive image and being able to zoom in). I came across this niche project that you probably haven’t heard of: Google Maps. The Google Maps JavaScript API could potentially help with two of those three problems: scalability and web-accessibility. It breaks the full image up into tiles with three dimensions:

- Zoom Level

- X coordinate

- Y coordinate
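To make the tile addressing concrete, here is a minimal sketch of how a tile’s zoom level and X/Y coordinates could map onto a region of the complex plane. It assumes the usual map-tile convention that zoom level z splits the image into 2^z by 2^z tiles, and it assumes the whole fractal spans -2 to 2 on both axes. The names `TileBounds`, `planeMin`, and `planeSize` are hypothetical and not taken from the actual project.

```go
package main

import "fmt"

// Assumed extent of the full image at zoom level 0 (an illustrative choice,
// not a value from the original renderer).
const (
	planeMin  = -2.0 // smallest real and imaginary coordinate
	planeSize = 4.0  // width and height of the full image in the plane
)

// TileBounds returns the corner of tile (x, y) with the smallest real and
// imaginary coordinates at the given zoom level, plus the tile's side length.
func TileBounds(zoom, x, y int) (re, im, side float64) {
	tiles := float64(uint(1) << uint(zoom)) // 2^zoom tiles along each axis
	side = planeSize / tiles
	re = planeMin + float64(x)*side
	im = planeMin + float64(y)*side
	return re, im, side
}

func main() {
	re, im, side := TileBounds(2, 1, 3)
	fmt.Printf("re=%.2f im=%.2f side=%.2f\n", re, im, side)
	// prints "re=-1.00 im=1.00 side=1.00"
}
```

Each step up in zoom level halves the side length of a tile, so the number of tiles quadruples with every level.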

I figured I could make a service that draws the requested tile; each instance of the service would also split the problem up and render the tile concurrently. I did this by rendering each pixel in its own goroutine. The front end requests all the tiles it needs to draw the full screen. Each tile is a separate request, which gets routed to one of multiple instances of the backend service behind a load balancer, and that instance renders the requested tile concurrently. Because tiles are independent of one another, this architecture should scale close to linearly as instances are added and make use of all the computing power available.
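As a rough illustration of the one-goroutine-per-pixel idea, here is a minimal sketch of a tile renderer. It is not the project’s actual code: the 256-pixel tile size, the iteration cap, the grayscale colouring, and the names `RenderTile` and `escapeTime` are all assumptions made for the example.

```go
package main

import (
	"fmt"
	"image"
	"image/color"
	"sync"
)

const (
	tileSize = 256 // assumed tile width and height in pixels
	maxIter  = 255 // assumed iteration cap
)

// escapeTime returns how many iterations of z = z*z + c it takes for |z|
// to exceed 2, or maxIter if it never does within the cap.
func escapeTime(c complex128) int {
	z := complex(0, 0)
	for i := 0; i < maxIter; i++ {
		z = z*z + c
		if real(z)*real(z)+imag(z)*imag(z) > 4 {
			return i
		}
	}
	return maxIter
}

// RenderTile renders the square region whose corner is (re, im) and whose
// side length is side, starting one goroutine per pixel.
func RenderTile(re, im, side float64) *image.Gray {
	img := image.NewGray(image.Rect(0, 0, tileSize, tileSize))
	step := side / tileSize
	var wg sync.WaitGroup
	for py := 0; py < tileSize; py++ {
		for px := 0; px < tileSize; px++ {
			wg.Add(1)
			go func(px, py int) {
				defer wg.Done()
				c := complex(re+float64(px)*step, im+float64(py)*step)
				// Each goroutine writes a different byte of img.Pix,
				// so no lock is needed.
				n := escapeTime(c)
				img.SetGray(px, py, color.Gray{Y: uint8(maxIter - n)})
			}(px, py)
		}
	}
	wg.Wait() // block until every pixel has been written
	return img
}

func main() {
	img := RenderTile(-2, -2, 4) // the whole assumed plane as one tile
	fmt.Println(img.GrayAt(128, 128).Y, img.GrayAt(0, 0).Y)
}
```

Goroutines are cheap enough that starting 65,536 of them per tile works, though a pool of workers that each take a row would be a reasonable alternative if scheduling overhead ever became noticeable.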

The application was now in a state where it could be placed behind a load balancer and replicated across multiple nodes, but there were still a few problems:

- How will we package the application?

- Where will the application package be stored?

- How will we load balance incoming traffic?

- How will we deploy the application from its package?

Docker solves the first two problems: packaging the build artifacts into an image and hosting that image in a registry. Kubernetes, along with Traefik as an ingress controller, handles the load balancing and the scheduling across physical nodes. This creates a complete system that scales well. Currently, I have it running on a sixteen-node Raspberry Pi 3 B+ cluster. Fun fact: it’d take approximately 2,000 TB to render and store all the tiled images this application can produce.

Some future areas I may explore are interactions between the services and the k8s ingress controller to dynamically adjust weighting based on factors such as TTFB (time to first byte) in the response. This could allow distributing the load across dissimilar machines (for instance, my desktop, which has a faster clock speed, and my rpi cluster, which has more threads but slow single-thread performance). I haven’t yet spent much time on interactions between k8s and the services it runs, but it is clearly something that could be of interest for production workloads and infrastructure, for instance sharing load between on-prem machines and scaling out to the cloud if required.