Machine learning allows you to take expert judgment and bake it into a mathematical model that can be used to solve similar, but new, problems.

In this short video clip, I highlighted that even with little AI development experience, it’s entirely possible to use this technology to build a useful application that solves a real problem. This is a growing field because, at this point, the potential return on investment is essentially uncapped. I spent approximately 20 hours teaching myself the high-level concepts and terminology, learning how various hyperparameters can influence results, and reviewing the high-level libraries and components that abstract away the mathematics behind machine learning. I then gave an example of a business application that assigns a quantified priority to incoming work, replacing a manual process that typically requires a human.

In this article, I dive deeper into how this technology is going to change how we interact with computers and applications.

Human-Computer Interfaces

An interface is any means by which we can interact with something. When we have a discussion with another person, we are interfacing with them. When we make a phone call, we are interfacing with the telephone, with all the communications equipment between the two phones, and with the person at the other end of the line.

When I give voice commands to a computer, use a keyboard and mouse, or touch the screen on my phone, I am interfacing with it: that is how I provide input to the computer, how I “speak” to it. When a computer displays something on the screen, plays sounds, or otherwise does something my senses pick up on, it is providing output to me, and that is how I “listen” to or “watch” the computer, which is also a way of interfacing with it. There is input (speaking) we must provide, and there is output (listening/watching) that we may receive.

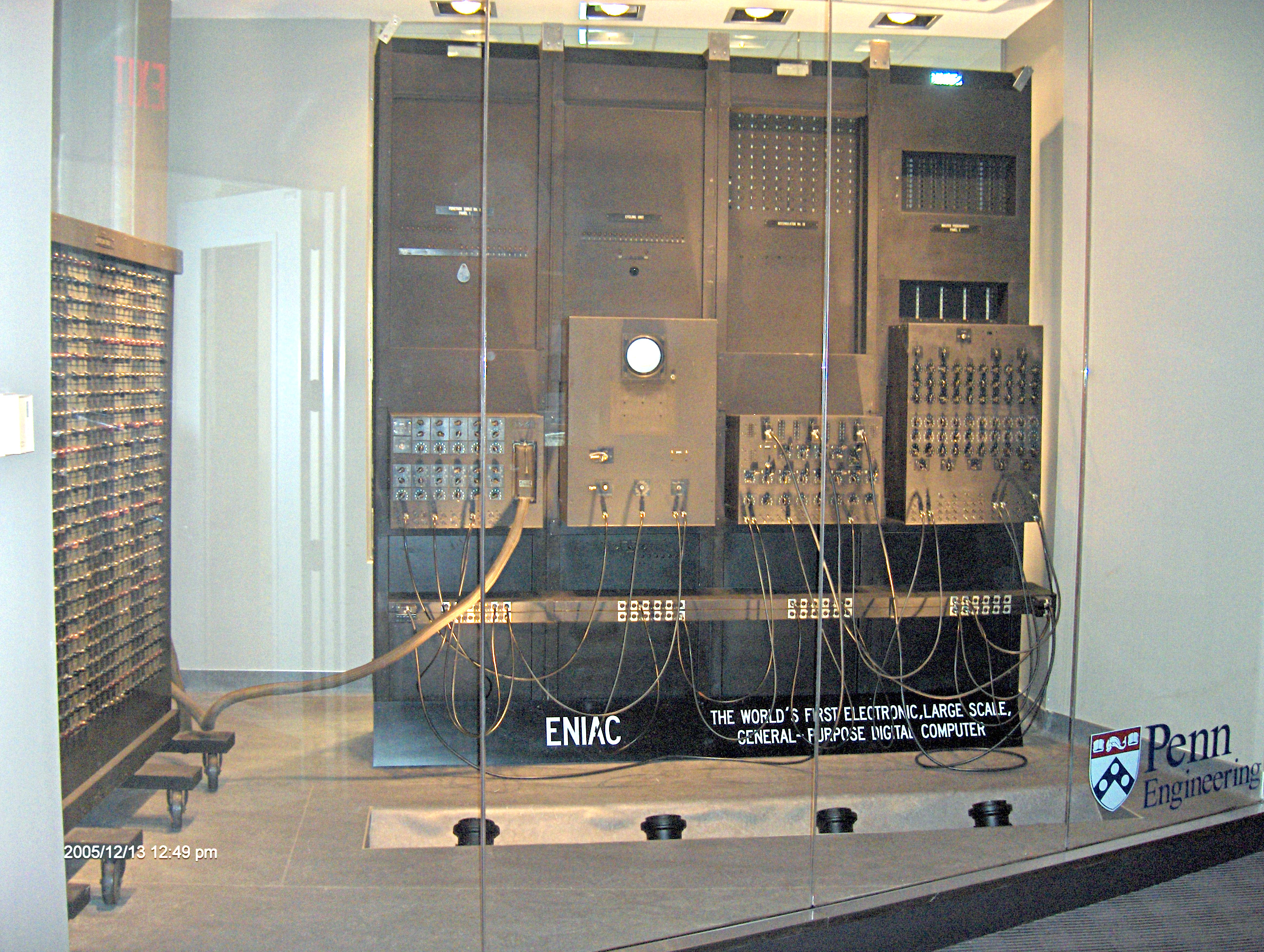

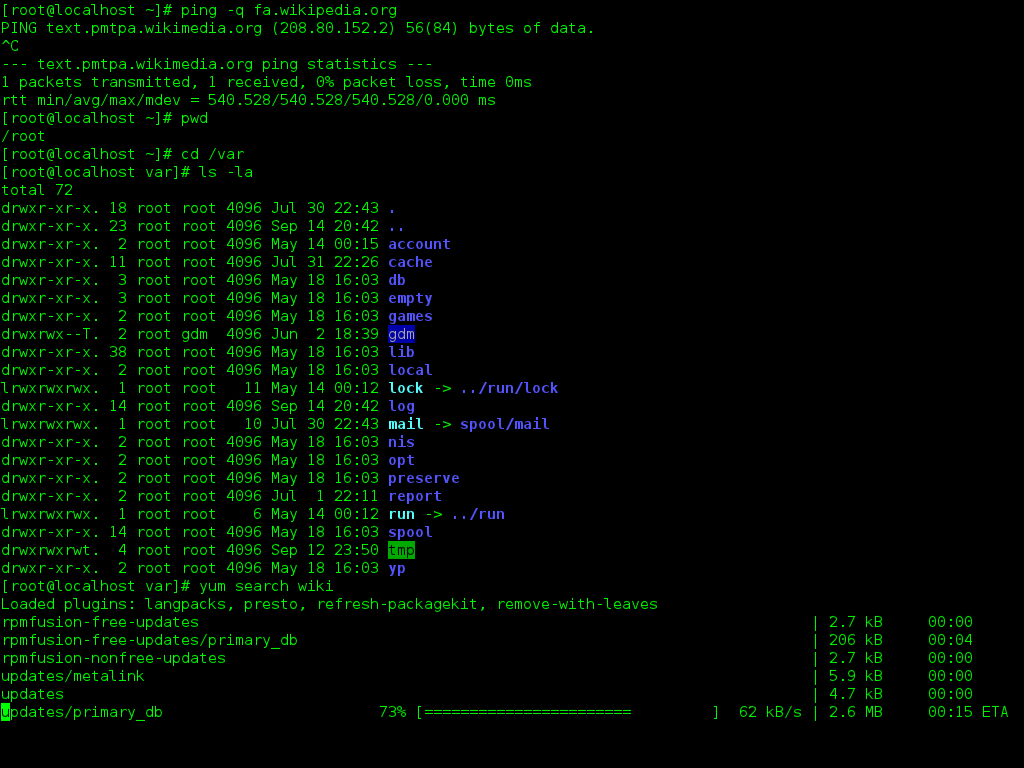

In the early days, we interfaced with computers by moving cables around and turning various knobs and dials, and it took specialized knowledge to operate a machine at all. The output was then printed on a sheet of paper for the user to read. Eventually, the CLI (Command Line Interface) became a common way to type commands into a computer. Although these commands were English words, they formed a language of their own, still required specialized knowledge, and took time for a user to become productive with.

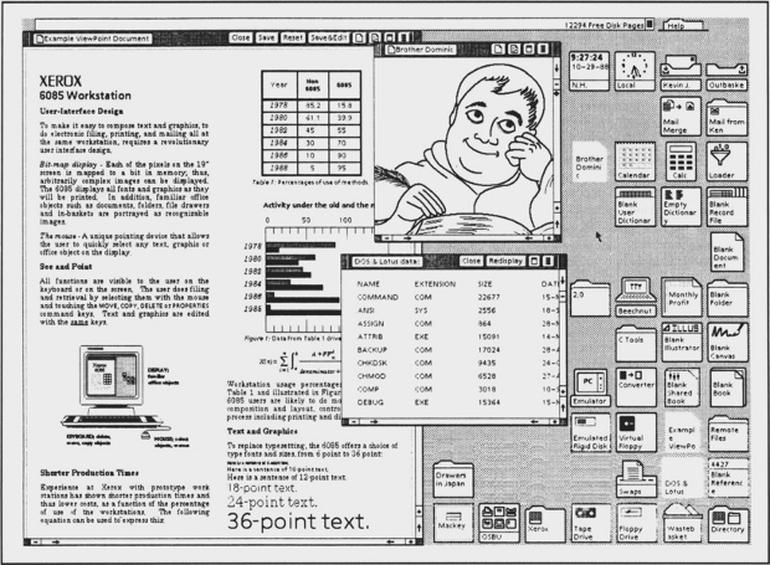

Then came video displays, and we no longer needed to print everything on paper. Finally, the GUI and the mouse arrived and provided an additional layer of abstraction. They offered better discoverability by literally displaying on the screen the actions the user can take. Anyone who could move their arm and push a button was quickly able to interface with a computer. Still, what each button in a GUI can do remains very limited: every action a user wants to perform requires a hardcoded procedure or process behind it.

Satisficing

There are countless problems for which we’ve satisficed; that is to say, we have solutions that have been “good enough” so far. We’ve made incremental improvements to these solutions here and there, but nothing that’s really a game changer (a solution in a completely different category, with a return on investment far beyond the older strategy). The problem is that better solutions than the ones we’re using do exist. These solutions are known and acknowledged, but they are not used. Why? Because we’ve invested in our current solutions, and changing would be expensive. The better solutions are better, but not by enough to justify the switch. With machine learning now having countless applications, it is pairing up with many of these existing better solutions and adding just enough extra benefit to push them past the cost thresholds that used to be prohibitive.

Machine learning solutions will likely replace many of the solutions we use for problems today. For example, in Prediction Machines: The Simple Economics of Artificial Intelligence, the authors repeatedly refer to a problem I would never have thought of as related to machine learning. As services such as Google Maps make better and better predictions from traffic data, you can plan your trip more precisely, leaving home at a time that statistically gets you to the airport without missing your flight. This may render airport lounges obsolete, or at least seriously reduce the value they provide to passengers. Airport lounges were created so that wealthier travelers and business travelers could relax and enjoy themselves after arriving at the airport earlier than required to make sure they did not miss their flight. Predictive road traffic analytics makes this much less of a problem. Additionally, predictive analytics on flight traffic and logistics may eliminate the need for airport lounges entirely. The book discusses many similar examples that make you think harder about how machine learning will affect industry, so I highly recommend you check it out.
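Once a traffic prediction is available, the “when should I leave?” decision is simple arithmetic. The sketch below is my own illustration, not something taken from the book or from Google Maps. It assumes you have a list of historical door-to-airport travel times for your route (the numbers here are made up), takes a high percentile of them as a pessimistic drive time, and subtracts that plus a fixed buffer from the boarding time. The function names and the 95% / 60-minute defaults are hypothetical choices.

```python
import math
from datetime import datetime, timedelta
from typing import Sequence


def nearest_rank_percentile(samples: Sequence[float], pct: float) -> float:
    """Smallest sample such that at least `pct` percent of samples are <= it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # nearest-rank method
    return ordered[max(rank, 1) - 1]


def latest_departure(boarding: datetime,
                     travel_minutes: Sequence[float],
                     confidence_pct: float = 95,
                     buffer_minutes: float = 60) -> datetime:
    """Latest time to leave home so that, on at least `confidence_pct` percent of
    the past trips in `travel_minutes`, you would reach the airport at least
    `buffer_minutes` before boarding."""
    drive = nearest_rank_percentile(travel_minutes, confidence_pct)
    return boarding - timedelta(minutes=drive + buffer_minutes)


# Made-up travel-time history (minutes) for one route at one time of day.
history = [38, 41, 35, 52, 44, 39, 47, 36, 61, 40]
print(latest_departure(datetime(2024, 5, 1, 10, 0), history))  # 2024-05-01 07:59:00
```

The better the travel-time predictions, the tighter that percentile gets, and the less time you end up spending at the airport before your flight.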

The Next Evolution of Interfaces

It’s machine learning. Here’s the thing: this technology used to be expensive to implement and didn’t produce great results. It used to require large teams of highly educated specialists just to have a remote chance of success. Today it doesn’t, and this is a very recent development.

The next evolution in how we provide input to a computer revolves around the leaps and bounds machine learning has made in the field of Natural Language Processing (NLP). NLP is the field of modeling human languages programmatically. It historically relied on strictly defining the rules of human languages: subjects, verbs, nouns, grammar, sentence structure, conversational context, and other facets of natural language. New developments in machine learning have given programs a much better understanding of language than those old hardcoded methods, and the new techniques are so much better that there’s no real competition between the two. It’s like the difference between the old automated phone systems that tried to route your call but never seemed to understand you, and today’s Amazon Alexa, Google Assistant, and Apple’s Siri. What this means is that we can expect many more natural language inputs in the future: you will speak or type what you want to do, and the computer will map your input to the function that best matches your intent.
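To make “map your input to the function that best matches” a little more concrete, here is a deliberately simple sketch. It is not how Alexa, Google Assistant, or Siri work, since those rely on learned language models. Instead, it scores a typed request against a few example phrasings per command using bag-of-words cosine similarity and picks the closest command. The command names and phrasings are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical commands an application might expose, each with a few example
# phrasings. A real assistant would learn from far more (and messier) data.
INTENTS = {
    "reset_password": ["reset my password", "i forgot my password", "cannot log in password"],
    "create_ticket": ["open a new ticket", "report a problem", "something is broken"],
    "check_status": ["what is the status of my request", "check ticket status", "is my issue fixed"],
}


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; word order is ignored."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def best_intent(utterance: str) -> str:
    """Return the command whose closest example phrasing best matches the input."""
    query = bag_of_words(utterance)
    scores = {
        name: max(cosine(query, bag_of_words(example)) for example in examples)
        for name, examples in INTENTS.items()
    }
    return max(scores, key=scores.get)


print(best_intent("I forgot my password again"))     # reset_password
print(best_intent("my printer is broken"))            # create_ticket
print(best_intent("check the status of my ticket"))   # check_status
```

Word overlap breaks down quickly (it has no idea that “locked out” means the same as “forgot my password”), which is exactly the gap that machine-learned language models close.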

The possibilities for the next evolution of outputs are too vast to list, but they essentially boil down to “anything that can reasonably be predicted, given past examples of what you want to predict.” The example from my video above was categorizing the description of an IT problem into the correct priority category (Low or High).
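Here is a minimal sketch of that kind of priority classifier, using a multinomial naive Bayes model written from scratch. This is a swapped-in textbook technique for illustration only; it is not the model from my video and says nothing about what AutoML does internally. The six training tickets are invented, so treat the predictions as a demonstration of the mechanics, not as a usable model.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical, hand-written training examples. A real model would be trained on
# a large history of tickets that humans have already prioritized.
TRAINING = [
    ("email server is down for the whole office", "High"),
    ("production database unreachable, customers affected", "High"),
    ("entire network outage in building", "High"),
    ("request a new mouse for my desk", "Low"),
    ("please install a font on my laptop", "Low"),
    ("change my desktop wallpaper settings", "Low"),
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesPriority:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter()            # label -> number of examples
        for text, label in examples:
            self.label_counts[label] += 1
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(label) + sum of smoothed log P(word | label)
            score = math.log(n / total)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NaiveBayesPriority().fit(TRAINING)
print(model.predict("the email server is down"))  # High
print(model.predict("new mouse for my laptop"))   # Low
```

The “expert judgment” lives entirely in the labeled examples: whoever prioritized the historical tickets is, in effect, who the model learns to imitate.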

Getting Started with Machine Learning

Machine learning is now widely available to pretty much anyone who has a computer and an internet connection. At the time of this writing, Google has kindly offered AutoML as a free service for under 30,000 classifications (or problems solved) per month, which is a lot for free. In the next few articles, I’m going to go over how to get started with Google AutoML for problems with text and image inputs, although, to be honest, the experience is so consistent that the two are pretty much the same.

Next

Part 2 - AutoML Text for the Average Human - A brief overview of how anyone can start using machine learning now to predict various outcomes based on text input.